rate-limiter-flexible limits the number of actions by key and protects against DDoS and brute-force attacks at any scale.

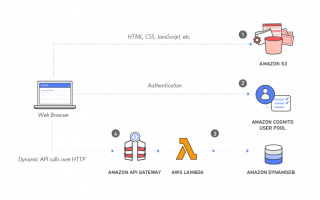

It works with Redis, process memory, Cluster or PM2, Memcached, MongoDB, MySQL, and PostgreSQL, and lets you control the request rate in a single process or a distributed environment.

Atomic increments. All operations, in memory or in a distributed environment, use atomic increments to prevent race conditions.

Fast. An average request takes 0.7 ms in Cluster and 2.5 ms in a distributed application. See benchmarks.

Flexible. Combine limiters, block a key for some duration, delay actions, manage failover with insurance options, configure smart key blocking in memory, and much more.

Ready for growth. It provides a unified API for all limiters, so it is ready whenever your application grows. Prepare your limiters in minutes.

Friendly. No matter which Node package you prefer (redis or ioredis, sequelize or knex, memcached, native driver or mongoose), it works with all of them.

It uses a fixed window, as it is much faster than a rolling window. See comparative benchmarks with other libraries here
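To make the fixed window idea concrete, here is a minimal in-memory sketch. It is not the library's implementation: `FixedWindowCounter` and its method are hypothetical names, and the injectable clock is an assumption added only to make the behavior easy to follow.

```javascript
// Hypothetical sketch of a fixed window counter; not the library's internals.
class FixedWindowCounter {
  constructor(points, durationSec, now = () => Date.now()) {
    this.points = points; // maximum points per window
    this.durationMs = durationSec * 1000;
    this.now = now; // injectable clock (assumption, for illustration)
    this.windows = new Map(); // key -> { consumed, expiresAt }
  }

  // Returns { allowed, remainingPoints, msBeforeNext } for the given key.
  consume(key, pointsToConsume = 1) {
    const t = this.now();
    let w = this.windows.get(key);
    if (!w || t >= w.expiresAt) {
      // The window opens on the first action and resets as a whole on expiry.
      w = { consumed: 0, expiresAt: t + this.durationMs };
      this.windows.set(key, w);
    }
    w.consumed += pointsToConsume;
    return {
      allowed: w.consumed <= this.points,
      remainingPoints: Math.max(this.points - w.consumed, 0),
      msBeforeNext: w.expiresAt - t,
    };
  }
}
```

Each key needs only one counter and one expiry time, which is why a fixed window is cheap compared with a rolling window that has to track individual timestamps.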

Installation

npm i --save rate-limiter-flexible

yarn add rate-limiter-flexible

Basic Example

```javascript
const { RateLimiterMemory } = require('rate-limiter-flexible');

const opts = {
  points: 6, // 6 points
  duration: 1, // Per second
};

const rateLimiter = new RateLimiterMemory(opts);

// remoteAddress is the key to limit by, e.g. the client IP
rateLimiter.consume(remoteAddress, 2) // consume 2 points
  .then((rateLimiterRes) => {
    // 2 points consumed
  })
  .catch((rateLimiterRes) => {
    // Not enough points to consume
  });
```

RateLimiterRes object

Both Promise resolution and rejection return an object of the RateLimiterRes class if no error occurred. Object attributes:

```javascript
RateLimiterRes = {
  msBeforeNext: 250, // Number of milliseconds before next action can be done
  remainingPoints: 0, // Number of remaining points in current duration
  consumedPoints: 5, // Number of consumed points in current duration
  isFirstInDuration: false, // action is first in current duration
}
```

You may want to set the following HTTP headers on the response:

```javascript
const headers = {
  "Retry-After": rateLimiterRes.msBeforeNext / 1000,
  "X-RateLimit-Limit": opts.points,
  "X-RateLimit-Remaining": rateLimiterRes.remainingPoints,
  "X-RateLimit-Reset": new Date(Date.now() + rateLimiterRes.msBeforeNext)
}
```
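These headers can be packaged in a small helper for reuse. The sketch below is only an illustration: `buildRateLimitHeaders` is a hypothetical name, and rounding `Retry-After` up to whole seconds and formatting the reset time as an ISO string are choices made here, not something the library prescribes.

```javascript
// Hypothetical helper; takes the limiter options and a RateLimiterRes-shaped object.
function buildRateLimitHeaders(opts, rateLimiterRes, now = Date.now()) {
  return {
    // Round up so the client never retries slightly too early (assumption).
    "Retry-After": Math.ceil(rateLimiterRes.msBeforeNext / 1000),
    "X-RateLimit-Limit": opts.points,
    "X-RateLimit-Remaining": rateLimiterRes.remainingPoints,
    "X-RateLimit-Reset": new Date(now + rateLimiterRes.msBeforeNext).toISOString(),
  };
}

// Example with the attribute values shown above and a fixed "now" of 0
// so the result is deterministic.
const exampleHeaders = buildRateLimitHeaders(
  { points: 6, duration: 1 },
  { msBeforeNext: 250, remainingPoints: 0, consumedPoints: 5, isFirstInDuration: false },
  0
);
```

In a real handler the `now` argument would normally be omitted so the current time is used.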

Advantages:

- no race conditions

- no production dependencies

- TypeScript declaration bundled

- in-memory Block Strategy against really powerful DDoS attacks (like 100k requests per sec). Read about it and benchmarking here

- Insurance Strategy as an emergency solution if the database / store is down. Read about Insurance Strategy here

- works in Cluster or PM2 without additional software. See RateLimiterCluster benchmark and detailed description here

- shape traffic with the Leaky Bucket analogy. Read about Leaky Bucket analogy

- useful `get`, `block`, `delete`, `penalty` and `reward` methods
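The Insurance Strategy item above is about staying available when the store is down. As a rough, library-independent sketch of that idea (the library exposes it through its own options, which are not reproduced here), the hypothetical `consumeWithInsurance` below tries a primary limiter and uses a backup limiter only when the primary rejects with an `Error` rather than with a rate-limit result.

```javascript
// Hypothetical sketch. Both limiters only need a consume(key, points) method
// that resolves on success, rejects with a plain result object when the limit
// is exceeded, and rejects with an Error when the store itself fails.
async function consumeWithInsurance(primary, insurance, key, points = 1) {
  try {
    return await primary.consume(key, points);
  } catch (rejection) {
    if (rejection instanceof Error) {
      // Store failure: fall back to the insurance limiter.
      return insurance.consume(key, points);
    }
    // Limit exceeded: pass the rejection through unchanged.
    throw rejection;
  }
}
```

Separating the two kinds of rejection matters: a key that is over its limit should stay rejected, while a store outage should not take the whole application down.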

Middlewares and plugins

Some copy/paste examples on Wiki:

- Minimal protection against password brute-force

- Login endpoint protection

- Websocket connection prevent flooding

- Dynamic block duration

- Authorized users specific limits

- Different limits for different parts of application

- Apply Block Strategy

- Setup Insurance Strategy

- Third-party API, crawler, bot rate limiting

Migration from other packages

- express-brute Bonus: race conditions fixed, prod deps removed

- limiter Bonus: multi-server support, respects queue order, native promises

Docs and Examples

- Options

- API methods

- RateLimiterRedis

- RateLimiterMemcache

- RateLimiterMongo (with sharding support)

- RateLimiterMySQL (supports Sequelize and Knex)

- RateLimiterPostgres (supports Sequelize and Knex)

- RateLimiterCluster (PM2 cluster docs read here)

- RateLimiterMemory

- RateLimiterUnion: combine 2 or more limiters to act as a single one

- RLWrapperBlackAndWhite: black and white lists

- RateLimiterQueue: rate limiter with FIFO queue